Carol Yang, Senior Research Analyst in Omdia’s Components and Devices group, breaks down the primary sensors used in autonomous vehicles in her latest blog.

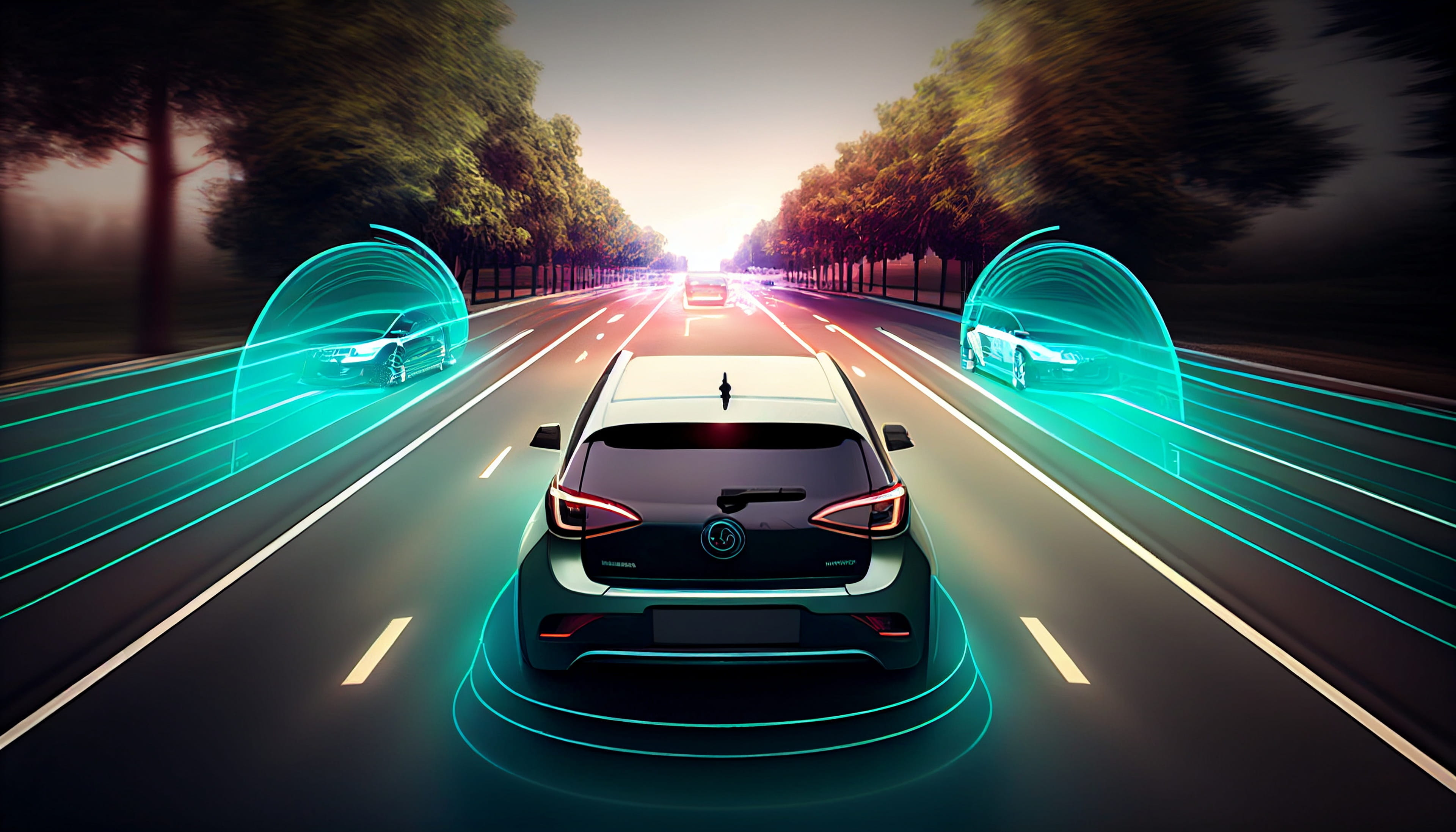

Sensing is the basis of intelligent driving algorithms and the starting point for every driving action the vehicle executes. Robust, comprehensive perception of the environment is a prerequisite for autonomous driving. Currently, cameras, millimeter wave radar, ultrasonic radar, and LiDAR are the key sensors for autonomous driving. Using these sensors, a vehicle can position itself in a complex driving environment.

1. Camera

The camera is the most indispensable sensor in vehicles today. It is mainly used for perceiving the road environment outside the vehicle and for monitoring the driver or occupants inside it. Automotive manufacturers use visual detection to achieve Level 2 and above assisted driving functions such as Forward Collision Warning (FCW), Lane Departure Warning (LDW), Adaptive Cruise Control (ACC), and Autonomous Emergency Braking (AEB). The number of cameras carried by vehicles is increasing. For example, Tesla’s Autopilot hardware suite consists of eight cameras, and the Nio ET7 is equipped with 11. Vehicles carried 2.2 cameras on average in 2019, and we expect that number to double by 2026.

As the number of cameras increases, so do the requirements for camera performance. Automotive cameras are being designed with higher resolution; the Nio ET7, for example, uses 8MP sensors in all 11 of its cameras. Higher dynamic range is needed to capture quality images in high-contrast scenes, and a higher frame rate provides smoother video.

In addition, automotive cameras have some unique requirements, such as LED flicker mitigation, which ensures that cars can correctly identify LED signals, and global shutter technology, which avoids the "jelly effect" in images of high-speed motion.

2. Millimeter wave radar

Millimeter wave radar is a device that uses millimeter waves to detect the distance to obstacles. As the level of autonomous driving continues to rise, millimeter wave radar will be widely used in Level 2 and above autonomous driving vehicles because of its cost advantage and stable working performance. It can work around the clock, which makes it a necessary supplement to camera sensors.

Millimeter wave radar is developing toward smaller size, higher accuracy, and longer detection range. The 24GHz products will gradually be replaced by 77GHz products, as the higher frequency brings higher performance, wider bandwidth, and better resolution.
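The link between bandwidth and resolution can be illustrated with the standard radar range-resolution relation, ΔR = c / (2B). The following is a minimal sketch; the two sweep bandwidths are illustrative assumptions chosen for this example and are not figures from Omdia, and the function name is hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Return the range resolution c / (2B) in meters for a sweep bandwidth B."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return C / (2.0 * bandwidth_hz)


# Assumed, illustrative sweep bandwidths (not taken from the article).
examples = {
    "24GHz radar, 250 MHz sweep": 250e6,
    "77GHz radar, 4 GHz sweep": 4e9,
}

for label, bandwidth in examples.items():
    print(f"{label}: {range_resolution_m(bandwidth):.3f} m")
```

Under these assumptions, the wider sweep separates targets that are a few centimeters apart in range, while the narrower sweep needs roughly 0.6 m of separation.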

We expect Level 3 and above autonomous driving systems to use 5 to 8 millimeter wave radars on average, to achieve functions such as Blind Spot Detection (BSD), Lane Changing Assist (LCA), and Rear Collision Warning (RCW).

3. Ultrasonic radar

Ultrasonic radar is one of the most commonly used sensors on conventional vehicles. It transmits, receives, and processes ultrasonic signals to obtain information such as the distance and position of a target. Ultrasonic sensors perform well in adverse weather conditions such as fog, rain, and snow, as well as in low light, and they are relatively inexpensive. As a result, ultrasonic radar is widely used in reversing radar and automatic parking systems.
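The distance measurement described above is typically based on echo timing. As a minimal sketch, assuming sound travels at about 343 m/s (dry air near 20 °C) and using a hypothetical function name, the distance follows from half the round-trip time:

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at roughly 20 °C


def echo_distance_m(round_trip_s: float,
                    speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Convert an echo round-trip time in seconds to a one-way distance in meters."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return speed_m_s * round_trip_s / 2.0


# A 6 ms round trip corresponds to an obstacle about one meter away.
print(f"{echo_distance_m(0.006):.3f} m")
```

Because sound is slow compared with radio or light, each measurement takes milliseconds even at parking distances, which fits the short-range, low-speed use cases mentioned here.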

Disadvantages of ultrasonic radar include long response time, limited field of view, and lower accuracy. In addition, ultrasonic sensors have difficulty detecting small or fast-moving objects and distinguishing multiple objects. In the long run, ultrasonic radar will be partially replaced by better-performing millimeter wave radar in high-level autonomous driving vehicles.

4. LiDAR

LiDAR is a system that emits laser beams and receives their echoes to obtain three-dimensional and velocity information about a target. LiDAR has a longer detection range than conventional cameras and is more accurate than millimeter wave radar in identifying static targets. It is considered to be the most important sensor for Level 3 and above autonomous driving systems. However, LiDAR can be ineffective in heavy rain or fog. Because of its high cost, LiDAR will continue to be fitted mainly to high-end models at the current stage.
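To make the "three-dimensional information" concrete, the sketch below converts a single LiDAR return (a measured range plus the beam's pointing angles) into a Cartesian point. The axis convention (x forward, y left, z up) and the function name are assumptions made for illustration; real sensors and software stacks define their own conventions.

```python
import math


def lidar_return_to_xyz(range_m: float, azimuth_deg: float,
                        elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return from spherical (range, azimuth, elevation) to x, y, z.

    Assumed convention: x points forward, y to the left, z up;
    azimuth is measured in the x-y plane, elevation from that plane.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = range_m * math.cos(elevation)  # projection onto the x-y plane
    x = horizontal * math.cos(azimuth)
    y = horizontal * math.sin(azimuth)
    z = range_m * math.sin(elevation)
    return (x, y, z)


# A return 20 m away, 30 degrees to the left, 2 degrees below horizontal.
print(lidar_return_to_xyz(20.0, 30.0, -2.0))
```

Repeating this conversion for every beam direction in a scan yields the point cloud that downstream perception algorithms work with.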

At present, hybrid solid-state solutions are more mature and meet vehicle regulations more easily, so they have become the mainstream choice. In the long term, LiDAR will evolve toward miniaturized, high-resolution (64-channel, 128-channel, and even 200-channel), low-cost solid-state solutions. In addition, LiDAR-on-a-chip design is the future direction of development.

As each type of sensor has its limitations and cannot provide full information about the vehicle’s surrounding environment, there is a rising need for sensor fusion technology. Sensor fusion combines the information from various sensors (e.g., radar, cameras, LiDAR, or ultrasonic) to provide a complete view of the environment, so that ADAS/AD features can be enabled with enough confidence.
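One simple way to picture fusion is inverse-variance weighting, where each sensor's distance estimate is weighted by how much it is trusted. This is only a sketch of one textbook rule, assuming independent measurement errors; it is not a description of any specific production system, and the function name and the sample readings are hypothetical.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes the sensor errors
    are independent and every variance is positive.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(variance <= 0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance readings to the same obstacle: (meters, variance in m^2).
readings = [
    (25.0, 0.25),  # millimeter wave radar
    (27.0, 4.00),  # camera
    (25.4, 0.04),  # LiDAR
]
value, variance = fuse_estimates(readings)
print(f"fused distance: {value:.2f} m, variance: {variance:.3f} m^2")
```

The fused variance is smaller than that of any single input, which is one way to read the statement that fusion lets features be enabled "with enough confidence".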

Omdia believes the demand for all types of sensors is set to accelerate in the coming decades as autonomous driving progresses. The evolution of autonomous driving will in turn keep pushing innovation in sensor technology, driving sensing performance to a higher level.

