The UK government’s highly ambitious AI Opportunities Action Plan (AI Action Plan, published 13/01/25) aims to harness AI productivity gains to boost the UK economy, to make the country a magnet for AI investment and talent, and ultimately to establish it as a global leader in AI innovation. The formulation of a national AI strategy for the UK is a welcome development and there are many commendable elements in the AI Action Plan. It is comprehensive (backed by 50 recommendations) and spans the public and private sectors, AI regulation, AI data needs, compute infrastructure and energy requirements, and the AI skills gap.

Co-ordinating an industrial AI and regulatory strategy of this magnitude is a massive challenge in terms of management, execution and funding, and is a long game that will outlive any single government. Moreover, aspects of the AI Action Plan are contentious and/or not always well thought through, notably: plans to unlock public data for AI training, the environmental implications of rapid datacentre expansion, risks that could arise from proposed measures to make AI regulations pro-innovation, the lack of detail on how the government will finance its plan beyond private investment, and how the drive for national AI sovereignty sits with private sector investment and control. Omdia interrogates these issues in a comprehensive report, but to kickstart the discussion, this blog focuses on unpacking and analyzing selected elements of the AI Action Plan, with an emphasis on responsible AI.

AI comes with challenges that are being played down

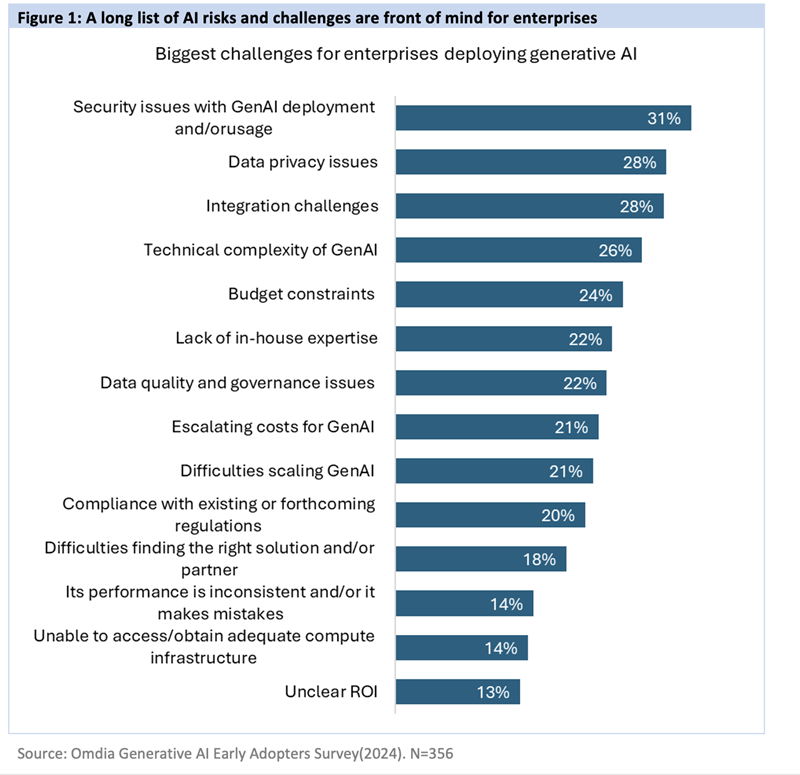

AI is a transformative technology that can deliver tangible benefits across multiple industries and business functions. Omdia’s 2024 AI Enterprise Market Maturity survey points to AI delivering return on investment (ROI) across all desired business outcomes from AI projects. For example, 21% of respondents report improvements in efficiency/automation. The UK government wants to attain benefits of this kind at a national level and uses bullish projections to suggest that AI could bring economic benefits to the country worth on average £47 billion annually over the next decade. However, the UK government gives the impression that AI is a magic bullet that can fix the country’s struggling economy and turbocharge growth. AI can help, but it is not a panacea. AI also introduces a new wave of risks and challenges that the government’s AI Action Plan is glossing over or ignoring outright. Safety and security issues are a case in point and are two of the biggest concerns for both consumer and enterprise users, as evidenced by the Omdia survey data shown in Figure 1. The government makes references to safety and security in the AI Action Plan, but only in passing and in broad, high-level terms.

Figure 1: A long list of AI risks and challenges are front of mind for enterprises

AI risk on the back burner

The government’s AI Action Plan acknowledges AI risks, but once again at a high level and largely in terms of how they affect regulation. AI risk is a broader concept than safety and security alone: it encompasses those issues but also includes the societal, economic, and existential risks posed by AI systems. AI risk in this sense is not prioritised in the AI Action Plan. This contrasts with the previous government, which was on a self-appointed mission to mitigate the risks posed by highly advanced AI models, kick-starting the process with the UK AI Safety Summit in 2023 and the subsequent establishment of the UK AI Safety Institute. The priority for the current government is AI growth and innovation and UK global leadership therein, rather than leading a global effort to mitigate risks from highly advanced AI systems. In fact, a strong focus on mitigating risks from advanced AI arguably sits in tension with the current government’s focus on making the UK a hub for the development of frontier AI, which is defined as AI models that are more advanced than anything currently in existence.

Red flags for AI regulation and governance

The UK government’s goals for AI are contingent on what it calls a pro-innovation approach to regulation, and there are positive suggestions on this front, including funding to help regulators improve their AI capabilities, the development of government-approved AI assurance tools, and support for regulatory sandboxes. There is nothing wrong with regulatory frameworks that encourage innovation, provided they do not compromise a primary remit of AI regulation: to ensure AI is developed and deployed in ways that produce equitable and beneficial outcomes, eradicating or minimising the risk of negative consequences. But there are aspects of the government’s vision for pro-innovation regulation that are a cause for concern in this context. The government says UK regulators will have a ‘growth duty’ for AI in the UK, which suggests regulators will be tasked with supporting the government’s ambitious targets for AI-fueled economic growth. This requires caution, as it could be interpreted as shifting the regulatory lens away from responsible AI and a duty to protect people, which in turn could undermine public confidence. Moreover, the government is considering establishing a central body that sits above regulatory authorities and has powers to green-light higher levels of AI risk tolerance in the interests of promoting AI innovation. The creation of such a central super-regulatory authority is radical and controversial. It amounts to tasking a body to regulate the regulators in ways that keep them on side with government ambitions, and this, coupled with the potential trade-offs between innovation and risk, will rightly trigger pushback from many quarters.

Sovereign AI aspirations will be eclipsed by private sector investment

The UK government wants to foster AI sovereignty, a scenario where the government has ownership of a nation’s AI resources (e.g., data, compute resources, AI technologies) and control over how AI assets are prioritised and allocated. However, the reality is that the UK government, like many governments, is not in a position to finance AI sovereignty and has little choice but to rely on private sector investment for its AI plan. Attracting private sector partners and investment for national projects is a competitive process and the government has to make the prospect appealing, but doing so can spark controversy by introducing measures that raise ethical, social, economic, and political concerns. For example, the government has said that firms committed to developing frontier AI in the UK could be offered guaranteed access to energy resources, at a time when the UK energy grid is already under intense pressure.

The UK government needs to be mindful of the potential tension and conflicts of interest that can stem from heavy reliance on private sector investment, particularly from Big Tech. Big Tech firms are generous investors in government and public sector AI projects. Although this is beneficial to recipients, it cements ties and obligations, with the danger being that this segues into Big Tech influence over how government AI resources are prioritised. Big Tech firms already dominate AI infrastructure, to the concern of many regulatory authorities. These include the UK’s Competition and Markets Authority (CMA), which is scrutinizing the market dominance of leading cloud infrastructure providers.

Unlocking public data is highly problematic

Data is a critical component in AI model training and inference, and those with control over, and easy access to, data resources have a competitive advantage. The UK government wants to be in this position, and to this end intends to unlock data from the public and private sectors that will be released to AI developers and researchers. Data assets will be managed by a new National Data Library (NDL), which will also be responsible for identifying high-impact public datasets. Mention has been made of scientific data and data from the NHS, but data in these domains is highly sensitive and its release to commercial enterprises will cause concern. Meanwhile, unlocking data from the private sector brings its own complexity and challenges due to issues of ownership, privacy and intellectual property (among other things). The government says it will provide incentives to encourage researchers and industry to curate and unlock private datasets, but there is little detail on what the incentives entail (e.g. tax breaks, subsidies, bundled access to compute power), and incentives typically involve costs to those providing them.

The logic behind the NDL is understandable, but the proposal is highly contentious. Data privacy and security are an ongoing issue in the AI domain, and front of mind for both consumers and enterprises (see Figure 1). The AI Action Plan talks about responsibly unlocking data and developing guidelines to support this, which is the bare minimum. The lack of detail is not reassuring and suggests this initiative has not been thought through with the rigour it deserves.
